Friends,

Let’s talk about something that’s fast becoming the elephant in every marketing war room. Not clicks, not conversions, not content. But trust. Or rather, the erosion of it.

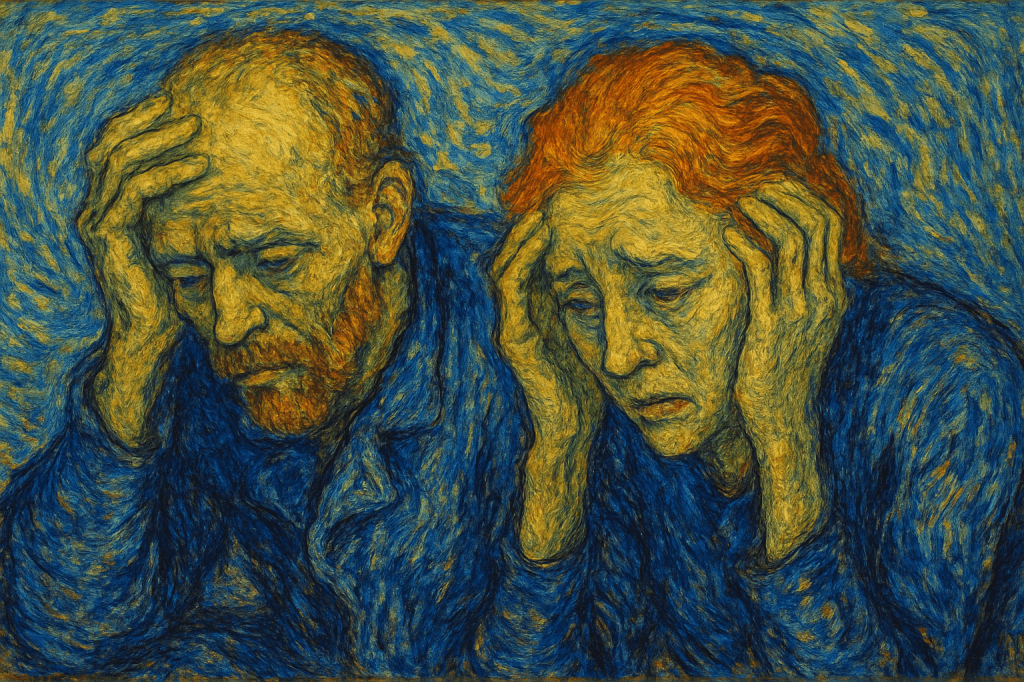

Over the past year, researchers and regulators alike have picked up on a rising consumer sentiment, one they’re calling algorithmic anxiety. It’s the discomfort people feel when AI is involved in decisions that directly affect them, from what products they see to whether they land a job or get approved for a loan. It’s not paranoia. It’s not technophobia. It’s a reflection of how much power algorithms have quietly assumed and how little consumers understand them.

A 2023 report by the Federal Trade Commission points to the depth of these fears. People are increasingly uncomfortable with how their data is collected, stored, and, most alarmingly, used to train AI models, often without their explicit consent. And this isn’t just theoretical. Creators are worried that their content is feeding the very models that could replace them. Others are disturbed by the idea of voice recordings during customer support being used to create voiceprint databases. It’s data exploitation dressed up as innovation.

Now layer on this. Most people don’t know how AI decisions are even made. Credit scoring, hiring, medical triage. Many of these rely on AI. But how do these systems evaluate a candidate’s worth or decide if a tumor is benign? It’s a black box. And as Harvard philosopher Michael Sandel warns, objectivity in AI is often just a veil for the biases baked into the data.

No wonder trust is fraying.

And the numbers back it up. In a 2024 Consumer Reports survey, 91% of Americans said they want the right to correct information used by AI systems. Why? Because they fear being judged and denied by algorithms they can’t see or influence. When you strip away the jargon, it’s a basic human fear. Losing control over one’s narrative.

Then there’s bias. Not just in hiring or lending, but in service. A 2024 study in the Journal of Consumer Behaviour found consumers perceive AI-enabled services as less fair and less empathetic, especially in industries where authenticity matters. Hospitality, health, education. This isn’t about tech failing. It’s about tech forgetting the human on the other side.

And here’s the kicker. Mention “AI” in your product descriptions, and you might actually tank your sales. A 2024 study in the Journal of Hospitality Marketing & Management discovered that when products like TVs or cars highlighted their AI features, purchase intent dropped. Not because people hate tech, but because they don’t trust who’s in charge of it.

We’ve seen this play out in broader narratives too. A World Economic Forum report from 2025 shows that consumers are okay with AI recommending a movie. But not managing their money or health decisions. The moment stakes rise, people pull back. Emotionally driven decisions? Consumers still trust humans. Especially in fashion, food, and beauty, as noted in a 2025 study shared on ResearchGate comparing AI and influencer impact.

So what do we, as marketers, do with all this?

We simplify. We demystify. We build empathy into every AI-driven touchpoint. That means transparency in how decisions are made. It means consumer controls. It means audits for fairness and tools for correction.

If our customers feel they’re being watched but not understood, all the personalization in the world won’t help us win them over.

Because at the end of the day, marketing isn’t about automation. It’s about connection.

And here’s the question we all need to think on. If our brand had to explain every algorithmic decision it made to a real customer, in real time, could it? And would that customer still trust us afterward?

Godspeed,

Ronith Sharmila
